Tensors for the Tonsorially Challenged

Randomness is all well and good, but there are days when we wish for some control over our lives, or at least our G'MIC installations. The question is, can we plot these swirling curves so that they flow in particular directions?

And, of course, the answer is “Yes!!!” Because that's exactly the problem which the anisotropic smoothing bits have to figure out when they are composing a tensor field. At the end of the day, smooth gets a tensor field which tells it exactly how to directionally blur an image. Its behavior is being managed in a precise way.

Since diffusiontensors knows how to write those kinds of instructions, our course is to prepare edges or lines in the patterns we'd like to make. The anisotropic smoothing bits will detect the lines and edges in these “guidelines” and build a diffusion tensor field for smooth. We'll then give smooth an entirely different image to work on. Noise, of course, can be an ideal basis for making hair.

Hairlocks

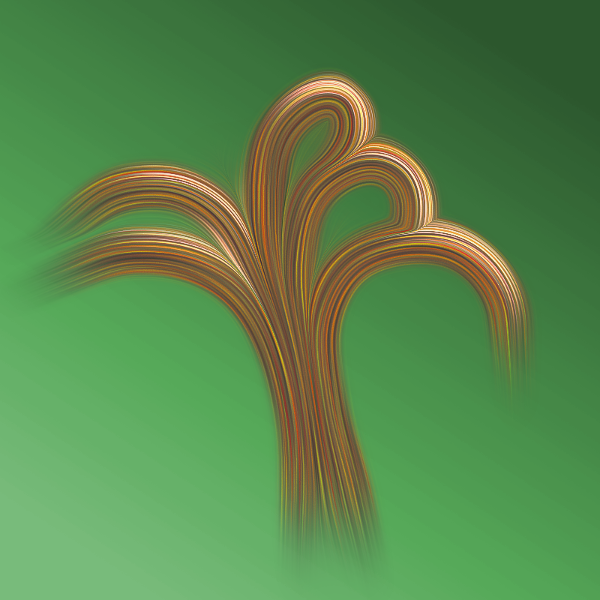

A lock of hair is an example of “directed noise”. A pretty simple hair model consists of noise that diffuses along particular curved paths, the individual bits of noise elongating into curved strands of hair, reminiscent of the directional blur we've seen on previous pages.

Of course, this model leaves out a lot, specular highlights and subsurface scattering among them, but if we're not that hell-bent on photorealistic rendering, then perhaps a coarse, first-order approximation serves our purposes.

Setup

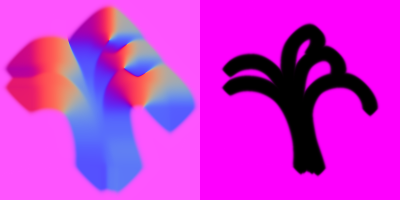

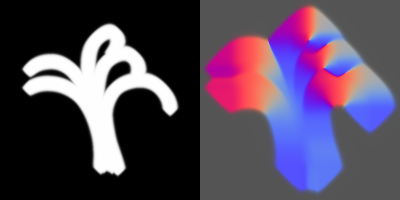

We begin with simple guide marks. We do not need many and almost any source will do; scanned-in felt pen or brush strokes serve as well as anything else.

We use Inkscape because we're partial to the Interpolated sub-paths tool in the Path Effects editor. We:

1. Make a curve.
2. Copy and flip it.
3. Move the curves apart and shape them by hand.
4. Combine the segments into one SVG path and use the path interpolation tool to estimate three intermediaries.
5. Convert back to sub-paths and break apart for any minor tweaking which aesthetic sensibilities might require.
6. This file kicks off the G'MIC pipeline. Look for hairlock_unit.png downstream.

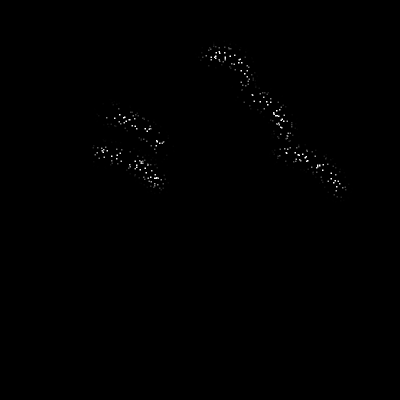

While specular highlights and subsurface scattering may be beyond our reach, we can get a rough approximation of such with sparse noise. The aim here is to smooth such noise along with the similar noise that generates the hair itself.

To that end, we open a layer above our guidelines and make some arcs, positioning them roughly where we think specular highlights should appear. We invert the art and blur it, making a multiplier mask. Downstream, we multiply this mask with sparse noise and smooth that pattern along with the hair. See hairlock_hilite.png downstream.

G'MIC Pipeline: Customizing a Tensor Field

The G'MIC command diffusiontensors does everything that smooth does except actually smooth an image. Instead, it produces a tensor field which smooth uses to remove noise anisotropically. Here, we'll invoke diffusiontensors ourselves and unpack its output tensor field, performing some custom edits on EigenOne and EigenTwo.

gmic -input hairlock_unit.png \

--diffusiontensors[-1] 1,0,0.1,4,0 \

-diffusiontensors[-2] 0,1,0.1,10,0 \

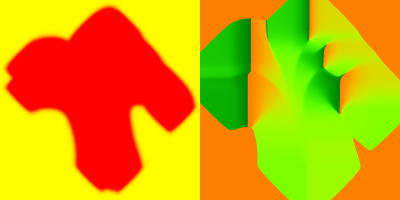

| We invoke diffusiontensors twice, but mainly work with the left-hand dataset. We generated this with sharpness set to zero and anisotropy set to one (the arguments 0,1,0.1,10,0), which engenders broadly sweeping smoothing patterns. Along that line, we also set the alpha parameter as near zero as we could stand and the sigma parameter to a high value. Behind the scenes, diffusiontensors calls structuretensors, first pre-blurring the operand image with a Gaussian whose variation is set by the alpha parameter. This slight blurring tends to erode noise more significantly than coherent edges, which helps diffusiontensors identify edges. diffusiontensors also blurs the tensor field produced by structuretensors, here by the amount given in the sigma parameter. This tends to suppress small-scale variations in local directions, favoring large-scale laminar flow. We invoke diffusiontensors a second time to harvest a mask from it that handily sets the region of the blurring pattern we deem valid, something of a heuristic judgment. We set the width of this mask by way of the sigma parameter passed to this second invocation. We convert this mask into a single-channel gray scale that will serve as the final image's alpha channel. |
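For those who like to peek behind the curtain, here is a minimal Python sketch of the idea behind a structure tensor. It is not G'MIC's implementation: the helper names structure_tensor and eigen_sym2 are our own inventions for illustration, and the sketch skips the alpha pre-blur and the sigma smoothing entirely. It forms the outer product of a central-difference gradient at one pixel, then finds the eigenvalues and dominant direction of the resulting 2×2 symmetric matrix.

```python
import math

def structure_tensor(img, x, y):
    """Outer product of the central-difference gradient at pixel (x, y).

    Returns the three distinct entries (ixx, ixy, iyy) of the 2x2 matrix.
    Hypothetical helper for illustration; not a G'MIC function.
    """
    ix = (img[y][x + 1] - img[y][x - 1]) / 2.0
    iy = (img[y + 1][x] - img[y - 1][x]) / 2.0
    return ix * ix, ix * iy, iy * iy

def eigen_sym2(a, b, c):
    """Eigenvalues (largest first) of [[a, b], [b, c]] and the angle, in
    radians, of the eigenvector belonging to the largest eigenvalue."""
    mean = (a + c) / 2.0
    root = math.hypot((a - c) / 2.0, b)
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    return mean + root, mean - root, theta

# A tiny image holding one vertical edge: dark on the left, bright on the right.
edge = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]

ixx, ixy, iyy = structure_tensor(edge, 1, 1)
lam1, lam2, theta = eigen_sym2(ixx, ixy, iyy)
```

At this vertical edge the dominant direction points across the edge (angle zero, along x) and the second eigenvalue vanishes. The edge itself runs perpendicular to that direction, which is the way we would like the blur to travel.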

-eigen[-2,-1] -rm[-1] \

-split[-1] c -rm[-1] \

-mul[-1] -1 -add[-1] 1 \

-normalize[-1] 0,255 -o[-1] hairmask.png \

-mv[-1] 0 \

| We edit the right-hand dataset to extract the mask in which we are interested, deleting all but EigenOne, which providentially selects a region centered on our original guidelines. We save this mask in a file and also move it to the first slot in the image list; it will be the alpha mask of the final image. |
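The arithmetic in this segment (multiply by minus one, add one, then normalize to 0,255) can be sketched in a few lines of Python. The function names invert and normalize are hypothetical stand-ins, not G'MIC's code, and the handling of a perfectly flat image is our own assumption.

```python
def invert(values):
    """Mirror of '-mul -1 -add 1': each value v becomes 1 - v."""
    return [1.0 - v for v in values]

def normalize(values, lo=0.0, hi=255.0):
    """Linearly stretch values so the minimum maps to lo and the maximum to hi."""
    mn, mx = min(values), max(values)
    if mx == mn:
        # Assumption: a constant image collapses to lo; G'MIC may differ.
        return [lo for _ in values]
    scale = (hi - lo) / (mx - mn)
    return [lo + (v - mn) * scale for v in values]

# A made-up row of eigenvalue samples, purely for illustration.
mask_row = normalize(invert([0.0, 0.25, 1.0]))
```

Small eigenvalues end up bright and large ones dark, which is the sense in which the mask is a negative of EigenOne.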

-split[-2] c \

-rm[-3] \

-mul[-2] -1 \

-add[-2] 1 \

--mul[-2] 0 \

-reverse[-1,-2] \

-append[-3,-2] c \

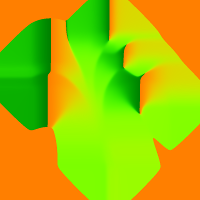

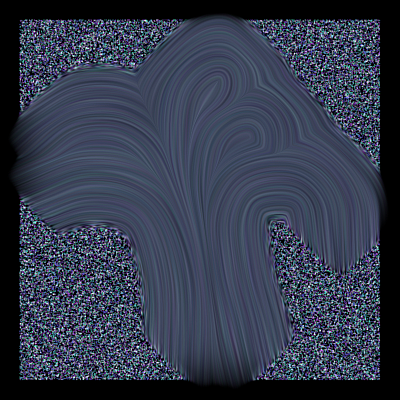

| We modify EigenOne and EigenTwo so that the only regions smooth will modify are those around our guidelines. We found the inverse of EigenTwo (one minus its value) preferable as EigenOne, and set EigenTwo to constant zero. When harnessing strong directional blur, as we are doing here, our wont is to set the magnitude of blurring entirely through EigenOne, keeping EigenTwo at zero. Otherwise, we harness EigenTwo primarily to reduce the eccentricity of the blurring ellipse. |

-eigen2tensor[-2,-1] \

-o[-1] hairlock_tensor.cimg \

| With our edits complete we fold up the control datasets into a tensor field, but have no images to blur. We conjure these from the aether in the next section. |
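What “folding up” amounts to can be sketched in Python, assuming the usual spectral form T = λ1·u·uᵀ + λ2·v·vᵀ with v perpendicular to u. The names tensor_from_eigen and apply_tensor are hypothetical, and the sketch makes no claim about how G'MIC lays out its channels or which eigenvector it pairs with which eigenvalue.

```python
import math

def tensor_from_eigen(lam1, lam2, theta):
    """Return (txx, txy, tyy) of T = lam1*u*u^T + lam2*v*v^T, where
    u = (cos theta, sin theta) and v is u rotated by 90 degrees."""
    ux, uy = math.cos(theta), math.sin(theta)
    vx, vy = -uy, ux
    txx = lam1 * ux * ux + lam2 * vx * vx
    txy = lam1 * ux * uy + lam2 * vx * vy
    tyy = lam1 * uy * uy + lam2 * vy * vy
    return txx, txy, tyy

def apply_tensor(tensor, x, y):
    """Multiply the symmetric 2x2 tensor by the vector (x, y)."""
    txx, txy, tyy = tensor
    return txx * x + txy * y, txy * x + tyy * y

# Strong directional blur: all magnitude in the first eigenvalue, none in the second.
theta = math.pi / 4
tensor = tensor_from_eigen(1.0, 0.0, theta)
along = apply_tensor(tensor, math.cos(theta), math.sin(theta))
across = apply_tensor(tensor, -math.sin(theta), math.cos(theta))
```

With the second eigenvalue at zero, a vector along u passes through unchanged while a vector across it is flattened to nothing. Raising the second eigenvalue toward the first would fatten that needle into an ellipse and, eventually, a circle.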

G'MIC Pipeline: Noise Generation

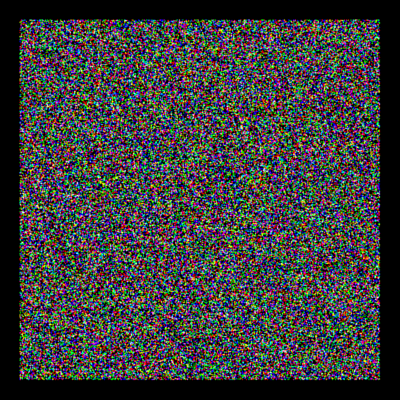

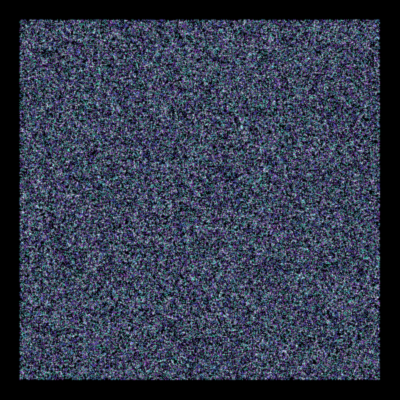

We model the hair with salt-and-pepper noise, upon which smooth performs a strong directional blur. Most of the following concerns itself with coloring a “slightly coalesced” noise, that is, a noise morphologically dilated, giving rise to thicker “hair strands” which can survive a certain amount of image shrinking.

We set the color of the hair by moving the noise into a Hue-Saturation-Lightness color space and manipulating the channels. Much of this work is moot, however, as at the end of the day we find ourselves readjusting the color work in Gimp.

Probably the most useful aspect of this segment is not so much the particular hue that we set, but the range over which the noise spans from the central color. This gives rise to variational streaking.

90%,90%,1,3 \

-noise[-1] 16,2 \

-resize[-1] [-2],[-2],[-1],[-1],0,0,0.5,0.5,0.5,0.5 \

-dilate_circ[-1] 2 \

-blur[-1] 1,1,1 \

-normalize[-1] 0,255 \

| Slightly dilated salt and pepper noise is the foundation for hair. We infill a region offset from the edges a bit because smooth has difficulty altering near-edge pixels, hence a black buffer zone. The amount of dilation here sets the coarseness of the hair; it is a candidate parameter of some gedanken gimp-gmic filter. |
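To see why dilation thickens the strands, here is a rough Python sketch of sparse noise followed by a morphological dilation with a disc. The functions sparse_noise and dilate_disc, the density argument, and the disc shape are all our own assumptions for illustration; they do not reproduce the exact behavior of -noise or -dilate_circ.

```python
import random

def sparse_noise(width, height, density, seed=0):
    """Black image with a scattering of white pixels; density is the
    probability that any given pixel is switched on (hypothetical parameter)."""
    rng = random.Random(seed)
    return [[255 if rng.random() < density else 0 for _ in range(width)]
            for _ in range(height)]

def dilate_disc(img, radius):
    """Morphological dilation: each pixel takes the maximum found within a
    disc of the given radius. Pixels outside the image are ignored."""
    height, width = len(img), len(img[0])
    offsets = [(dx, dy)
               for dy in range(-radius, radius + 1)
               for dx in range(-radius, radius + 1)
               if dx * dx + dy * dy <= radius * radius]
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            best = img[y][x]
            for dx, dy in offsets:
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    best = max(best, img[ny][nx])
            out[y][x] = best
    return out

# One lit pixel in the middle of a 5x5 image grows into a small blob.
speck = [[0] * 5 for _ in range(5)]
speck[2][2] = 255
blob = dilate_disc(speck, 1)

# Sparse noise, coarsened the same way.
coarse = dilate_disc(sparse_noise(32, 32, 0.05, seed=1), 2)
```

A larger radius makes each speck, and therefore each eventual strand, correspondingly fatter.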

-rgb2hsl[-1] \

-split[-1] c \

-mul[-3] 0.3 \

-add[-3] 170.0 \

-mul[-2] 0.4 \

-mul[-1] 0.1 \

-append[-3,-2,-1] c \

-hsl2rgb[-1] \

-mv[-1] 0 \

| We colorize the hair noise by moving to the Hue-Saturation-Lightness color model and largely scaling and offsetting the Hue dataset. These particular machinations set a blue-gray hair color, but are moot. With final compositional efforts debouching into Gimp or similar tools, there are ample downstream opportunities to set color elsewhere. |
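The channel arithmetic above can be tried on a single pixel with Python's colorsys module. This is only a sketch: shift_hsl and recolor are hypothetical names, and we assume hue is measured in degrees from 0 to 360 with saturation and lightness running from 0 to 1, which may not match every detail of -rgb2hsl.

```python
import colorsys

def shift_hsl(hue_deg, sat, light):
    """The pipeline's channel arithmetic: H*0.3 + 170, S*0.4, L*0.1."""
    return hue_deg * 0.3 + 170.0, sat * 0.4, light * 0.1

def recolor(rgb):
    """Recolor one RGB triple whose components lie in [0, 1]."""
    r, g, b = rgb
    h, l, s = colorsys.rgb_to_hls(r, g, b)  # colorsys orders its result H, L, S
    hue_deg, sat, light = shift_hsl(h * 360.0, s, l)
    return colorsys.hls_to_rgb((hue_deg % 360.0) / 360.0, light, sat)

# Pure red (hue 0) lands at the low end of the compressed hue range.
dark_teal = recolor((1.0, 0.0, 0.0))
```

Scaling hue by 0.3 squeezes the full circle into a 108 degree arc starting at 170, so every grain of noise lands somewhere between that starting hue and 278. The width of that arc, more than the particular offset, is what produces the streaking.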

hairlock_hilite.png \

-normalize[-1] 0,1 \

100%,100%,1,1 \

-noise[-1] 2,2 \

-mul[-2,-1] \

-mv[-1] 0 \

| Here, we generate a rough approximation of “specular highlights”, harnessing a mask we originally set up in Inkscape. We multiply a gray scale channel of sparse salt-and-pepper noise with the mask that we created during our initial Inkscape session. |

G'MIC Pipeline: Smoothing Everything

-repeat 3 \

-smooth[-4,-3,-2] [-1],500 \

-done \

-normalize[-4,-3,-2] 0,255 \

-sharpen[-4] 6,0 \

-rm[-1] \

-append[-2,-1] c \

-output[-1] hairlock_final.png \

-output[-2] hairlock_gfinal.png

| At last, we invoke smooth. You probably thought we'd never get here. We harness the tensor field composed in the first part of the G'MIC pipeline to smooth the specular highlights, the body of the hair, and the selection mask. |

| The base color of the hair arises from smoothing the denser, colored salt-and-pepper noise. |

| We use this image as the alpha channel of the hairlock. Recall that we extracted this from a dataset produced by a -diffusiontensors command near the top of the pipeline. |

Final Composition

| To test our concept, we overlapped the original guidelines on the hair lock. The tracking is quite faithful, almost too faithful, as real hair will have strands here and there migrating around. Steps toward more hairlike hair could entail multiple passes with sparse noise. If we warp the guidelines slightly with each pass, the resulting hair will crisscross. Furthermore, we would have more layers to composite, with opportunities of making shadow layers. In other words, with a greater investment of time and thought, we'll have a cure for pattern baldness. |

Code in this tutorial has been implemented as a Gimp-GMIC Filter. See The Hair lock Filter.
